Broadly, the prime focus of my research is the interplay among probability theory, dynamical systems, and statistics. I investigate

- statistical properties of dynamical systems and Markov processes,

- inferential statistics in the context of dynamical systems, and

- higher order asymptotics for limit theorems

using spectral theory and a combination of techniques from classical probability theory, stochastic analysis, statistics, and dynamical systems. Recently, I have started looking at

- applications of machine learning to problems related to my main research interests.

So far, I have formed research connections not only with dynamicists and probabilists but also with machine learning researchers and statisticians, and I am actively searching for interdisciplinary research opportunities.

Below, I briefly discuss some of my research interests under three themes. The resulting publications are listed here.

Invariants and Universality

When studying physical systems with many degrees of freedom, it is impossible to keep track of microscopic behaviour based on physical laws. Imagine writing equations of motion for about $6 \times 10^{23}$ gas particles (about 1 mole) with inter-dependencies and solving that system of equations!

Statistical Physics is the study of how these particle-particle interactions describe systems on a macroscopic scale. One can calculate observable properties of a system either as averages over phase trajectories (Ergodic Theory) or as averages over an ensemble of systems, each of which is a copy of the system (Thermodynamics). In either case, invariant measures play a crucial role, and establishing their existence (and uniqueness) is an important problem. In fact, the ergodic invariant measures can be used to describe the statistics of a system entirely.
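For reference, the agreement between trajectory averages and ensemble averages is the content of Birkhoff's ergodic theorem. The symbols $T$, $\mu$, and $f$ are introduced here for illustration only: if $\mu$ is an ergodic invariant probability measure for a map $T$ and $f$ is a $\mu$-integrable observable, then for $\mu$-almost every initial condition $x$,

$$\lim_{n \to \infty} \frac{1}{n} \sum_{k=0}^{n-1} f(T^k x) = \int f \, d\mu .$$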

Many systems pose the additional difficulty of interacting components that vary on disparate timescales. Fast-slow partially hyperbolic systems (on the torus), investigated by Jacopo De Simoi and Carlangelo Liverani during the last decade, are the simplest theoretically non-trivial examples of this kind. I have an ongoing project with Jacopo De Simoi on the existence of invariant measures, and their decay of correlations, for fast-slow partially hyperbolic systems with positive centre Lyapunov exponents.

Some systems are deterministic flows with discontinuities at random times at which they make random jumps. Such Piece-wise Deterministic Markov Processes have numerous applications to science and engineering. I am interested in studying the existence of invariant measures in such systems. Drawing parallels with some of my previous work, Pratima Hebbar and I expect that statistical properties of switching systems can be studied.

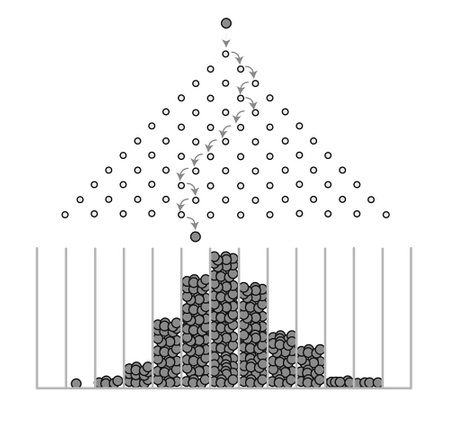

The Central Limit Theorem, a widely studied statistical property of systems, is an example of universality: due to the aggregation of microscopic information, the averaged asymptotic behaviour of the system at the macroscopic scale follows a bell curve, independent of the underlying mechanism of information generation. Universality has deep consequences for applied fields like Biology and Economics as well as for more theoretical fields like Number Theory. Tanja Schindler and I proved the Central Limit Theorem for the real part, the imaginary part, and the absolute value of the Riemann zeta function sampled over a class of dynamical systems on $\mathbb{R}$ with heavy-tailed invariant measures. This result has potential number-theoretic applications because it extends well-known results on sampling the Lindelöf hypothesis.

Higher-order Asymptotics

Ergodic Theory asserts that most of the realizations of an ergodic system distribute according to an ergodic invariant measure. This fact has far-reaching consequences for practical applications that range from celestial mechanics to molecular dynamics and drug design. However, these finite-time applications require exact asymptotics of the universal behaviour of systems (including probabilistic limit laws).

Dmitry Dolgopyat and I extended the existing theory of Edgeworth expansions (higher-order asymptotics of the Central Limit Theorem) for independent and identically distributed random variables to encompass typical discrete random variables, and we provided precise descriptions of the failure of such expansions. The next natural question is whether it is possible to describe the error in the Central Limit Theorem for a particular discrete random variable, rather than the typical error. This is an ongoing project.
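As a point of reference, the classical first-order Edgeworth expansion can be stated as follows. This is the textbook statement, not the extension described above, and the notation is introduced here for illustration. Assume $X_1, X_2, \dots$ are independent and identically distributed with mean zero, variance $\sigma^2 > 0$, a finite third moment, and a non-lattice distribution. Write $S_n = X_1 + \dots + X_n$, and let $\Phi$ and $\varphi$ denote the standard normal distribution function and density. Then, uniformly in $x$,

$$\mathbb{P}\left( \frac{S_n}{\sigma \sqrt{n}} \le x \right) = \Phi(x) + \frac{\mathbb{E}[X_1^3]}{6 \sigma^3 \sqrt{n}} \, (1 - x^2) \, \varphi(x) + o\left(n^{-1/2}\right).$$

For lattice-valued variables the left-hand side has jumps of order $n^{-1/2}$, so an expansion of this form with a smooth correction term cannot hold. This is why discrete random variables require separate treatment.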

As an extension of one of my previous projects with Carlangelo Liverani, Françoise Pène and I introduced a general theory of Edgeworth expansions and higher-order asymptotics in the Mixing Local Limit Theorems for weakly dependent (possibly unbounded) random variables arising as observations from mostly hyperbolic dynamical systems or Markov processes. The hyperbolic systems that we discuss, like Sinai billiards and piece-wise expanding maps, are natural models in many applications: billiard models in optics, acoustics, and classical mechanics, and expanding maps in random number generators and in biological and medical models, to name a few. There is an ongoing project with Françoise Pène on generalizing this theory further to include systems that exhibit intermittent behaviour.

Pratima Hebbar and I study the higher-order asymptotics of Large Deviations that describe the extremal behaviour of orbits. In particular, this theory leads to the exact description of the asymptotics of fundamental solutions of parabolic PDEs that model Branching Diffusion Processes.

Computing Precise Estimates

Having exact asymptotics of the statistical behaviour alone is insufficient for applications. The problem of finding more accurate and more precise estimates of parameters in systems appears naturally in many areas, including machine learning, physics, econometrics, and engineering. Moreover, one should be able to apply tools from statistics to limited dynamically generated data and obtain meaningful inferences.

Even though there has been significant progress in the application of tools from inferential statistics to deterministic dynamics, the bootstrap, a versatile tool that achieves higher-order estimation accuracy than the normal approximation, had not been implemented until my work with Nan Zou. Our bootstrap enables one to quantify the uncertainty in estimates of important dynamical quantities, like the top Lyapunov exponent and metric entropy, by constructing their 95% confidence intervals. Currently, we are working on developing better estimates for spectral densities of dynamical systems.
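To give a rough idea of what a bootstrap confidence interval for a dynamical quantity looks like, here is a minimal sketch. It is not the procedure developed with Nan Zou: it uses a generic moving block bootstrap with a plain percentile interval, and the skewed tent map, its break point `A`, the block length, and all function names are assumptions chosen for illustration. For this map Lebesgue measure is invariant, so the Lyapunov exponent is known in closed form and can be compared with the estimate.

```python
import numpy as np

A = 0.3  # hypothetical break point of the skewed tent map


def skewed_tent_orbit(x0, n, a=A):
    """Return n successive points of the orbit of x0 under the skewed tent map."""
    orbit = np.empty(n)
    x = x0
    for i in range(n):
        orbit[i] = x
        x = x / a if x < a else (1.0 - x) / (1.0 - a)
    return orbit


def log_abs_derivative(x, a=A):
    """log|T'(x)|: the slope is 1/a on [0, a) and -1/(1 - a) on [a, 1]."""
    return np.where(x < a, -np.log(a), -np.log(1.0 - a))


def moving_block_bootstrap_ci(values, block_len, n_boot, rng, level=0.95):
    """Percentile confidence interval for the mean of a dependent sequence.

    Each bootstrap series is built by concatenating randomly chosen
    overlapping blocks of consecutive observations, which preserves
    short-range dependence inside each block.
    """
    n = len(values)
    n_blocks = int(np.ceil(n / block_len))
    offsets = np.arange(block_len)
    boot_means = np.empty(n_boot)
    for b in range(n_boot):
        starts = rng.integers(0, n - block_len + 1, size=n_blocks)
        idx = (starts[:, None] + offsets[None, :]).ravel()[:n]
        boot_means[b] = values[idx].mean()
    alpha = 1.0 - level
    low, high = np.quantile(boot_means, [alpha / 2.0, 1.0 - alpha / 2.0])
    return low, high


rng = np.random.default_rng(0)
orbit = skewed_tent_orbit(x0=0.1234, n=5000)
samples = log_abs_derivative(orbit)

# The Lyapunov exponent is the orbit average of log|T'|.
estimate = samples.mean()
exact = -A * np.log(A) - (1.0 - A) * np.log(1.0 - A)
ci_low, ci_high = moving_block_bootstrap_ci(samples, block_len=20, n_boot=500, rng=rng)

print(f"estimate = {estimate:.4f}, exact = {exact:.4f}")
print(f"95% bootstrap interval = [{ci_low:.4f}, {ci_high:.4f}]")
```

The block length controls how much of the dependence between consecutive observations is retained; the value 20 used here is an arbitrary choice, not a recommendation.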

In recent years, with the proliferation of machine learning research, the modeling and prediction of real-world phenomena have progressed to the next level. The problem of computing estimates for an unknown probability distribution using sample data is crucial for applications; one example is computing estimates of invariant densities of dynamical systems. In machine learning, a normalizing flow (NF) is a generative model that learns the probability distribution of sample data by performing a maximum likelihood optimization. Sameera Ramasinghe and I introduced a framework to construct NFs that demonstrate higher robustness against initial errors. Even though NFs are susceptible to numerical instabilities, this is the first time the robustness of NFs has been investigated in the literature.
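The maximum likelihood principle behind NFs can be illustrated with the simplest possible flow. The sketch below is not the framework introduced with Sameera Ramasinghe: it fits a one-dimensional affine map $z = (x - b) e^{-s}$ with a standard normal base density, using the change-of-variables formula $\log p_X(x) = \log p_Z(z) + \log |dz/dx|$ and plain gradient ascent. The synthetic data, the parameter names `shift` and `log_scale`, and the learning rate are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic sample standing in for data from an unknown density (here N(2, 0.5^2)).
data = rng.normal(loc=2.0, scale=0.5, size=2000)


def log_likelihood(x, shift, log_scale):
    """Average log-density of x under the affine flow z = (x - shift) * exp(-log_scale)."""
    z = (x - shift) * np.exp(-log_scale)
    log_base = -0.5 * z**2 - 0.5 * np.log(2.0 * np.pi)  # standard normal base density
    log_det = -log_scale  # log|dz/dx| from the change-of-variables formula
    return np.mean(log_base + log_det)


shift, log_scale = 0.0, 0.0
initial_ll = log_likelihood(data, shift, log_scale)

learning_rate = 0.05
for _ in range(2000):
    z = (data - shift) * np.exp(-log_scale)
    # Analytic gradients of the average log-likelihood.
    grad_shift = np.mean(z) * np.exp(-log_scale)
    grad_log_scale = np.mean(z**2) - 1.0
    # Gradient ascent: move the parameters uphill on the likelihood.
    shift += learning_rate * grad_shift
    log_scale += learning_rate * grad_log_scale

final_ll = log_likelihood(data, shift, log_scale)
print(f"fitted shift = {shift:.4f}, fitted scale = {np.exp(log_scale):.4f}")
print(f"average log-likelihood: {initial_ll:.4f} -> {final_ll:.4f}")
```

For this affine flow the optimum is available in closed form (the sample mean and the sample standard deviation), which makes it easy to check the optimization. Practical NFs compose many nonlinear invertible layers and rely on automatic differentiation instead of hand-written gradients.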